How nVidia SLI Works

There are two rendering methods that nVidia uses in their SLI configurations. The first is called Split Frame Rendering (SFR), and it divides the screen horizontally into two parts, letting each card render a separate portion of the screen. The primary GPU is responsible for the top half of the video frame, while the secondary GPU is responsible for the lower half of the video frame.

The second SLI rendering method is called Alternate Frame Rendering (AFR). Under this method, the video cards take turns rendering sequential frames. For instance, card one would handle frames 1, 3, and 5, while video card two would handle frames 2, 4, and 6. This allows each card to spend about double the normal amount of time to render a single video frame.
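To make the two schemes concrete, here is a minimal sketch of how frames or screen rows could be assigned in a two-card setup. The function names are made up for illustration and are not part of any nVidia driver interface, and the SFR helper assumes a plain even split rather than a weighted one.

```python
def afr_gpu_for_frame(frame_number, gpu_count=2):
    """Return the 0-based GPU index that renders a 1-based frame number under AFR."""
    return (frame_number - 1) % gpu_count


def sfr_rows_for_gpu(gpu_index, frame_height, gpu_count=2):
    """Return the (first_row, end_row) band a GPU renders under an even SFR split.

    end_row is exclusive. The last GPU absorbs any leftover rows.
    """
    band = frame_height // gpu_count
    start = gpu_index * band
    end = frame_height if gpu_index == gpu_count - 1 else start + band
    return start, end


if __name__ == "__main__":
    # AFR: card one (index 0) takes frames 1, 3, 5; card two (index 1) takes 2, 4, 6.
    for frame in range(1, 7):
        print("frame", frame, "-> GPU", afr_gpu_for_frame(frame))

    # SFR: the primary GPU takes the top half, the secondary GPU the bottom half.
    for gpu in range(2):
        print("GPU", gpu, "rows", sfr_rows_for_gpu(gpu, 1200))
```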

Once the primary GPU knows which rendering method it will be using, as directed by the SLI ForceWare drivers, the CPU sends the vertex point data (line endpoint coordinates in geometry) for a single video frame to the primary GPU over the PCI Express bus for rendering calculations. The drivers determine how the primary GPU then delegates a workload to the secondary GPU. That data is sent over the SLI bridge (the SLI connector is commonly referred to as a bridge when speaking of actually transferring data). Once each GPU has its graphical data, both begin rendering. From that point on, all communication between the video cards occurs over the SLI bridge, and no further traffic for that specific video frame travels on the PCI Express bus.

When the secondary GPU is done rendering its frame, the image is sent from its frame buffer (RAM allocated to hold the graphics information for a single frame/image), over the SLI bridge, and into the primary GPU’s buffer. If rendering with SFR, it is combined with the primary card’s image in the frame buffer. Finally, the complete frame is sent as output to the display.

By default, you do not get to choose which method to use, and on top of that, the choice is not determined on the fly to offer the best performance for a specific machine. nVidia has profiled a list of over 300 popular games, with the default rendering method specified for each game. For instance, games that use slow motion special effects would not be able to use AFR because of the unusual frame timings and the alpha blending of one frame on top of another. So, logically, the default mode for such a game would be SFR. (As a side note, any blending or other dependency between frames makes a game incompatible with AFR.)

If the game has not been profiled, then the SLI drivers default the rendering mode back to single-GPU rendering to avoid encountering any rendering issues. However, if you so choose, you can force a rendering method on the game and make a custom profile for it, though this process is not exactly intuitive with the current release of the ForceWare drivers. Note that choosing a rendering mode will take some trial and error to find settings that will work correctly with the game. What it all boils down to is whether or not the game can actually handle being forced to undergo an SLI rendering method. After all, you can’t expect all current and past games to fully support this new technology. nVidia is currently making moves with many of the major game makers to allow for SLI support for future games. Game profiling is horribly inefficient for gaming as a whole, but is a relatively quick and dirty way to handle rendering mode selection in a pinch for most of the popular games on the market.
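The selection logic described above amounts to a lookup with a safe fallback. The sketch below is only an illustration of that idea: the game names, the `DRIVER_PROFILES` table, and `select_mode` are all hypothetical, and the real profile format used by the ForceWare drivers is not shown here.

```python
from enum import Enum


class Mode(Enum):
    SINGLE_GPU = "single"
    SFR = "sfr"
    AFR = "afr"


# Hypothetical stand-in for the list of pre-profiled games shipped with the drivers.
DRIVER_PROFILES = {
    "example_shooter": Mode.AFR,
    "example_slowmo_game": Mode.SFR,  # frame-to-frame blending rules out AFR
}


def select_mode(game, driver_profiles, user_profiles=None):
    """Pick a rendering mode for a game.

    A custom (forced) user profile wins, then the shipped profile.
    An unprofiled game falls back to single-GPU rendering.
    """
    user_profiles = user_profiles or {}
    if game in user_profiles:
        return user_profiles[game]
    return driver_profiles.get(game, Mode.SINGLE_GPU)


if __name__ == "__main__":
    print(select_mode("example_shooter", DRIVER_PROFILES))
    print(select_mode("unprofiled_game", DRIVER_PROFILES))
    # Forcing a mode on an unprofiled game through a custom profile.
    print(select_mode("unprofiled_game", DRIVER_PROFILES, {"unprofiled_game": Mode.SFR}))
```

Whether a forced mode actually renders correctly is still down to trial and error with the game itself; the lookup only decides what gets attempted.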

In any case, it appears that nVidia’s preferred method for rendering is AFR because of its higher rendering performance, even though SFR offers load balancing and appears to offer a better input response time for gamers. With AFR, latency can arise between user input and the video display because frames are rendered in alternating fashion, and the effect is compounded at lower framerates. With SFR, there are no such latency issues, and the input/output response time is the same as it would be on a single-GPU system. All the rendering method does is render one frame after another. This makes data synchronization easier than with AFR because SFR doesn’t have to worry about frame timings.

As for the resource sharing argument: because both video cards work together on the same frame with SFR, resources (textures, shading and light calculations, etc.) can be shared between the two halves. With AFR, each frame has its own set of resources to work with. That is not a fair comparison, seeing as SFR is being judged on only one frame, while AFR is being judged on two. In other words, the performance gain either way when referring to shared resources is, in fact, negligible.

Splitting the frame to distribute the workload under SFR generates a significant amount of calculation overhead. These calculations serve two purposes. First, they ensure that all of the geometrical faces and textures line up when the frame is split. Second, they ensure that the workload is split evenly, depending on the complexity of the frame. For example, if the bottom portion of a frame is full of complex geometric shapes, while the upper portion of the frame merely displays a flat sky, the frame would be split unevenly on screen so that the mathematical rendering calculations are distributed evenly between the two GPUs. This is why SFR does not deliver a fully doubled performance increase over two video cards. The calculations for each frame split take a small bite out of the overall rendering performance. For this reason, nVidia prefers AFR as its rendering method, because it offers better rendering times.

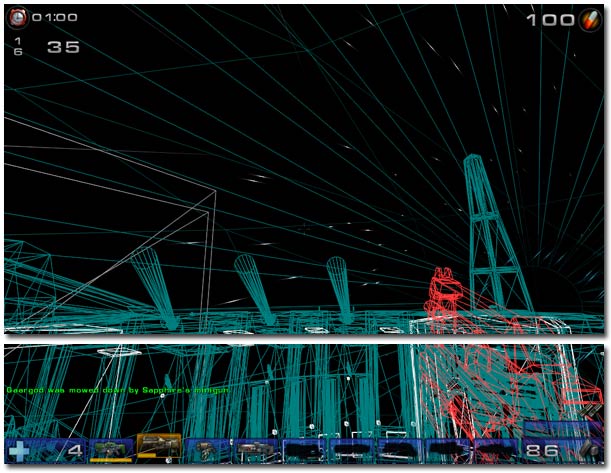

Here’s a screenshot from UT2004 in wireframe mode, which is split horizontally to help illustrate the idea of a weighted workload under SFR:

Notice that although the frame is split unevenly visually, the geometric rendering workload is split evenly.
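The idea of a weighted split can be sketched in a few lines. This is an illustration only: it assumes each screen row can be given a rough rendering cost (the `row_costs` list is invented for the example), and it is not how the ForceWare drivers actually measure a frame.

```python
from itertools import accumulate


def balanced_split_row(row_costs):
    """Return the row index at which to cut the frame.

    Rows [0, split) go to the primary GPU and rows [split, len) to the
    secondary GPU, chosen so the two cost totals are as close as possible.
    """
    if len(row_costs) < 2:
        raise ValueError("need at least two rows to split a frame")
    total = sum(row_costs)
    running = list(accumulate(row_costs))  # running[i] = cost of rows 0..i
    # For a cut at s, top cost is running[s - 1] and bottom cost is total - top.
    return min(range(1, len(row_costs)),
               key=lambda s: abs(2 * running[s - 1] - total))


if __name__ == "__main__":
    # Six cheap "sky" rows on top, four expensive "geometry" rows below.
    row_costs = [1] * 6 + [3] * 4
    split = balanced_split_row(row_costs)
    print("cut at row", split)
    print("top cost", sum(row_costs[:split]), "bottom cost", sum(row_costs[split:]))
```

In this toy frame the primary GPU ends up with seven of the ten rows, yet both GPUs carry the same cost, which is the uneven-looking but even-working split the screenshot illustrates.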


All of these calculations for SFR are performed by the nVidia ForceWare SLI drivers. The workload allocation is determined primarily by comparing the GPU rendering load over the past several frames. Based on this stored history, the drivers predict where the split should be made in the next frame. If the prediction is not accurate, one GPU will finish its rendering task sooner than the other and then sit idle while it waits for the other to finish. A faulty prediction therefore hurts overall performance.
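A history-based prediction of this kind might look something like the sketch below. The `SplitPredictor` class, its window size, and its clamping limits are assumptions made for illustration; the actual algorithm inside the drivers is not documented here. It also makes the simplifying assumption that rendering cost is spread uniformly within each GPU's portion of the screen.

```python
from collections import deque


class SplitPredictor:
    """Guess the next SFR split from the render times of recent frames.

    `split` is the fraction of the screen (from the top) given to the primary GPU.
    """

    def __init__(self, history=4, initial_split=0.5):
        self.split = initial_split
        self.samples = deque(maxlen=history)  # (primary_rate, secondary_rate)

    def record(self, primary_ms, secondary_ms):
        """Store how costly each GPU's portion was, per unit of screen."""
        self.samples.append((primary_ms / self.split,
                             secondary_ms / (1.0 - self.split)))

    def predict(self):
        """Return the split that would have balanced the recorded frames."""
        if not self.samples:
            return self.split
        primary_rate = sum(p for p, _ in self.samples) / len(self.samples)
        secondary_rate = sum(s for _, s in self.samples) / len(self.samples)
        if primary_rate + secondary_rate == 0:
            return self.split
        # Solve x * primary_rate == (1 - x) * secondary_rate for x.
        new_split = secondary_rate / (primary_rate + secondary_rate)
        self.split = min(0.9, max(0.1, new_split))  # assumed safety clamp
        return self.split


def idle_ms(primary_ms, secondary_ms):
    """Time the faster GPU spends waiting when the split was mispredicted."""
    return abs(primary_ms - secondary_ms)


if __name__ == "__main__":
    predictor = SplitPredictor()
    predictor.record(primary_ms=4.0, secondary_ms=12.0)  # sky on top, geometry below
    print("idle time last frame:", idle_ms(4.0, 12.0), "ms")
    print("next split:", predictor.predict())
```

The idle time is the cost of a bad guess: in the example frame, the primary GPU waits 8 ms for the secondary, and the predictor responds by handing the primary a larger share of the screen.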

With AFR rendering, there is no such splitting overhead. The full frames are prepared by the CPU, managed by the ForceWare drivers, then sent to the next available GPU. The GPU receives the rendering data the same way a single-GPU system would, renders the frame, buffers the frame, and sends it to the primary GPU to be buffered sequentially, then sent as output.

There is one large caveat in the AFR system. If the first frame in a sequence has not yet finished rendering but the second in line has, the CPU prepares a third frame for the now idle GPU, and the completed second frame is swapped out of the rendering buffer and into the primary card’s buffer. The CPU sends the third frame’s data to the ForceWare drivers, the drivers send it to the idling GPU, and the GPU begins rendering that frame, all while the second frame is still waiting for the first frame to complete rendering. Once the first frame has been completed, it is sent as output, followed by the second frame, and finally the third frame when it is ready.
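The scenario above can be walked through with a small simulation. This is a toy model under stated assumptions: render times are given up front in milliseconds, each new frame goes to whichever GPU is free first, and a finished frame is only shown once every earlier frame has been shown. `simulate_afr` is a made-up name, not a driver function.

```python
def simulate_afr(render_ms, gpu_count=2):
    """Simulate AFR with in-order output.

    Returns one dict per frame with the GPU used, when rendering finished,
    when the frame could be shown, and how long it was held waiting.
    """
    gpu_free_at = [0.0] * gpu_count
    last_shown = 0.0
    timeline = []
    for frame, cost in enumerate(render_ms, start=1):
        # Hand the frame to the next available GPU.
        gpu = min(range(gpu_count), key=lambda g: gpu_free_at[g])
        finished = gpu_free_at[gpu] + cost
        gpu_free_at[gpu] = finished
        # Frames must reach the display in sequence.
        shown = max(finished, last_shown)
        last_shown = shown
        timeline.append({"frame": frame, "gpu": gpu, "finished": finished,
                         "shown": shown, "held": shown - finished})
    return timeline


if __name__ == "__main__":
    # Frame 1 is slow; frames 2 and 3 are quick.
    for entry in simulate_afr([30, 10, 10]):
        print(entry)
```

With a slow first frame, the second frame finishes early on the other GPU and is held, the third frame is rendered on that same GPU in the meantime, and all of them reach the display only after the first frame is done.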

This is how the latency issue becomes a problem with AFR. While the frames are being held up as described above, the user input has time to change. So when the output is finally displayed, it reflects the old input, while the frames reflecting the new input are still being rendered. This is seen as lag, much like the lag experienced while playing online multiplayer games over a slow Internet connection, or with high latency for any other reason. This latency issue with AFR can usually be averted by lowering the gaming resolution, AA, and AF, but if it’s still a problem, the eye candy in the game needs to be lowered.

If you have ever attempted to play Tomb Raider: Angel of Darkness, that is a prime example of an extremely high user input delay. Another example, albeit with a less drastic delay, is Indiana Jones and the Infernal Machine. If the latency problems in those games were found in fast-paced action games, they would certainly put you at a serious disadvantage. That is one of the main issues that can come up when running AFR.

Each rendering method has its strengths and weaknesses, as compared above. That is why nVidia has chosen to pre-test and profile each game in order to determine the best rendering method for each one, depending on resource requirements (and other factors). Again, it is a quick and dirty solution, but it is sufficient for a good number of the more popular games on the market.

AdamTheTech.com and respective content is Copyright 2003-2025.
